AI Inference Market Growth Drivers, Trends, Key Players and Regional Insights by 2034

AI Inference Market Size

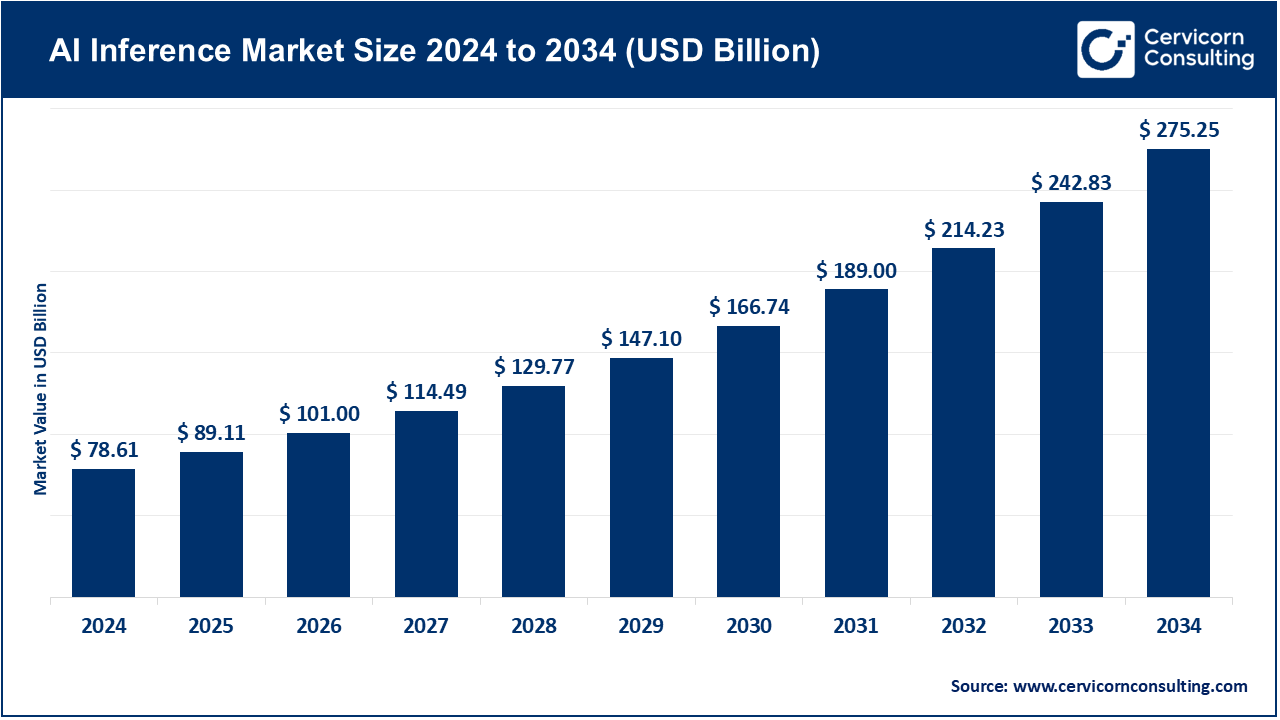

The global AI inference market size was worth USD 78.61 billion in 2024 and is anticipated to expand to around USD 275.25 billion by 2034, registering a compound annual growth rate (CAGR) of 17.5% from 2025 to 2034.
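As a quick arithmetic check on figures like these, the growth rate implied by two endpoint values follows from the standard formula CAGR = (end / start)^(1 / years) - 1. The sketch below is illustrative only: the function names are hypothetical, and whether the compounding span should be ten years (2024 to 2034) or nine (2025 to 2034) is an assumption, since the summary does not say which base year the rate uses.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Endpoint figures quoted above, in USD billions.
    start, end = 78.61, 275.25
    for span in (10, 9):  # assumed spans: 2024-2034 and 2025-2034
        print(f"{span} years: {implied_cagr(start, end, span):.1%}")
```

With the endpoints quoted above, this gives roughly 13% over ten years and roughly 15% over nine. Both are below the stated 17.5%, which suggests the headline rate rests on a different base value or scope than the summary specifies.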

AI Inference Market — Growth Factors

The AI inference market is expanding rapidly due to several powerful drivers. The surge in generative AI and large language models (LLMs) has significantly increased demand for inference hardware and services capable of handling real-time workloads. Enterprises are moving from centralized, cloud-only deployment to hybrid architectures that combine cloud and edge inference, reducing latency and bandwidth costs while enabling privacy-preserving computation. Simultaneously, hardware innovation across GPUs, NPUs, TPUs, FPGAs, and other domain-specific ASICs has improved performance-per-watt and inference speed.

The falling cost of high-bandwidth memory, rising enterprise cloud adoption, and data-sovereignty regulations encouraging localized inference are key tailwinds. Additionally, MLOps platforms are simplifying model deployment, while government funding for AI infrastructure further accelerates market growth. Together, these factors have propelled the AI inference market into a multi-billion-dollar segment in 2024, with strong double-digit CAGR projections through the next decade.

What is the AI Inference Market?

The AI inference market encompasses all technologies and services required to operationalize trained machine learning models. It includes the hardware (e.g., GPUs, NPUs, CPUs), cloud and edge infrastructure, software frameworks, and runtime environments that enable predictive analytics, image recognition, language understanding, or generative output in real-world applications.

Key components of the market include:

- Hardware: Specialized processors and accelerators designed for low-latency, high-efficiency inference tasks.

- Infrastructure: Data centers, cloud GPU/accelerator instances, and edge computing nodes that execute AI workloads at scale.

- Software and Middleware: Compilers, inference runtimes, quantization tools, and APIs that optimize model performance across devices.

- Vertical Solutions: Industry-specific inference services for healthcare, finance, retail, manufacturing, and autonomous systems.

In 2024, estimates of the global AI inference market ranged from USD 76 billion to USD 98 billion, depending on whether hardware, software, and services are all included. The market is expected to sustain robust growth due to the accelerating integration of AI into products and enterprise workflows.

Why the AI Inference Market is Important

Inference is the stage where AI transitions from research to practical impact. Every AI-powered application—from recommendation engines and fraud detection to self-driving cars and voice assistants—relies on inference to process data and generate intelligent responses in real time.

While model training occurs periodically and is computationally intensive, inference happens continuously, often millions of times per second across distributed systems. Optimizing inference efficiency is therefore critical for cost control and user experience. Inference determines how fast and affordably organizations can deliver AI-driven personalization, automation, and decision-making.

The market’s importance is also strategic: inference sits at the intersection of semiconductor design, cloud infrastructure, software engineering, and regulatory compliance. Enterprises depend on efficient inference pipelines to scale AI adoption, while nations view it as essential to competitiveness and data sovereignty. In short, inference is where artificial intelligence delivers its value to the real world.

Top Companies in the AI Inference Market

Below is an overview of the leading companies shaping the AI inference market, highlighting their specialization, focus areas, notable features, 2024 performance, market share, and global presence.

Amazon Web Services, Inc. (AWS)

Specialization: Cloud-based AI infrastructure and managed inference services.

Key Focus Areas: Amazon SageMaker endpoints, Inferentia and Trainium accelerators, Elastic Inference, and edge inference via AWS IoT Greengrass.

Notable Features: AWS provides deep integration across its ecosystem, offering seamless scalability and cost optimization through custom inference chips (Inferentia). Its managed services allow developers to deploy, monitor, and scale AI models easily.

2024 Revenue: AWS’s total cloud segment generated approximately USD 108 billion in 2024, maintaining its dominance in cloud computing and AI services.

Market Share / Global Presence: AWS leads the global cloud inference landscape, with a presence in over 30 regions worldwide. Its vertically integrated ecosystem and custom silicon give it a strong position in enterprise AI adoption.

Arm Limited

Specialization: Processor IP licensing for CPUs, GPUs, and NPUs widely used in mobile and edge devices.

Key Focus Areas: Power-efficient AI cores, neural processing units for smartphones, IoT inference optimization, and embedded AI enablement.

Notable Features: Arm’s architecture powers billions of devices, forming the backbone of mobile AI inference. Its designs enable on-device intelligence in smartphones, cameras, wearables, and embedded systems.

2024 Revenue: Around USD 4 billion in annual revenue, largely from royalties and licensing.

Market Share / Global Presence: Arm’s technology is integrated into the vast majority of mobile chips, giving it unmatched reach in the global edge and IoT inference ecosystem.

Advanced Micro Devices, Inc. (AMD)

Specialization: High-performance CPUs and GPUs for AI training and inference workloads.

Key Focus Areas: Data-center AI accelerators (Instinct MI300 series), EPYC CPUs optimized for inference, and software frameworks for model deployment.

Notable Features: AMD’s MI300 accelerators have gained significant traction among hyperscalers for cost-efficient inference. The company emphasizes open software ecosystems and power-efficient data-center solutions.

2024 Revenue: The data-center AI division contributed over USD 5 billion in annual revenue, reflecting growing adoption of AMD accelerators.

Market Share / Global Presence: AMD is rapidly expanding its market share in inference hardware, serving major cloud providers and enterprise customers globally.

Google LLC

Specialization: Cloud AI services and custom hardware (TPUs) for large-scale inference.

Key Focus Areas: Google Cloud’s Vertex AI platform, TPU pods, Gemini model family integration, and AI-driven enterprise services.

Notable Features: Google’s Tensor Processing Units (TPUs) are purpose-built for inference and training, enabling ultra-efficient performance for LLMs and image models. Its advanced AI stack supports model deployment, monitoring, and optimization.

2024 Revenue: Google Cloud generated roughly USD 43 billion in 2024, reaching about USD 12 billion in the fourth quarter, driven by growing enterprise adoption of AI infrastructure.

Market Share / Global Presence: Google Cloud ranks among the top three global providers of inference services, with a strong footprint in North America, Europe, and Asia-Pacific.

Intel Corporation

Specialization: General-purpose CPUs, AI accelerators, and inference-optimized hardware.

Key Focus Areas: Xeon CPUs for inference, Habana Labs Gaudi accelerators, and OpenVINO toolkit for model optimization.

Notable Features: Intel leverages its massive enterprise installed base and open-source optimization tools to retain relevance in inference workloads. Its heterogeneous compute strategy integrates CPUs, GPUs, and accelerators for AI tasks.

2024 Revenue: Intel reported approximately USD 53 billion in total annual revenue.

Market Share / Global Presence: Intel remains a major inference provider, particularly in on-premise and enterprise data centers, with a presence spanning every major geographic region.

Leading Trends and Their Impact

1. Model Efficiency and Compression

As models grow larger, there is increasing emphasis on techniques such as quantization, pruning, and distillation, which reduce model size and compute cost with little loss of accuracy. This allows inference to run on smaller, energy-efficient hardware, enabling real-time intelligence on devices like smartphones, sensors, and IoT systems.

Impact: Lower inference costs, faster response times, and broader AI accessibility at the edge.
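To make the quantization idea concrete, here is a minimal sketch of symmetric int8 quantization with a single scale factor per tensor. It is one common scheme among several, not a description of any particular vendor's toolchain. The function names and the example weights are made up for illustration.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one symmetric scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is 0.0 (or the list is empty).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp as a guard against floating-point rounding at the extremes.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.40]  # made-up example values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst_error = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print("scale:", scale)
    print("worst round-trip error:", worst_error)
```

Each weight is stored in one byte instead of four, and the round-trip error per weight is bounded by half the scale factor. That trade of a small, bounded error for a fourfold size reduction is what makes the technique attractive on memory-constrained edge devices.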

2. Heterogeneous Computing and Interoperability

The market is moving toward heterogeneous architectures that combine CPUs, GPUs, NPUs, and FPGAs. Runtimes such as ONNX Runtime, which can hand execution to vendor back ends including TensorRT and OpenVINO, promote interoperability across devices, reducing vendor lock-in and letting workloads run on whichever accelerator is available.

Impact: Developers gain flexibility, and competition among chipmakers intensifies, driving faster innovation.
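One way this interoperability shows up in practice is back-end selection at load time: the serving code asks which accelerators are present and falls back gracefully. The sketch below shows only the selection logic in plain Python. The strings are ONNX Runtime execution provider names, but the preference order and the helper name `choose_providers` are assumptions made for illustration.

```python
from typing import Sequence

# Assumed preference order, fastest accelerator first, CPU as the fallback.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "OpenVINOExecutionProvider",
    "CPUExecutionProvider",
)


def choose_providers(
    available: Sequence[str],
    preferred: Sequence[str] = PREFERRED_PROVIDERS,
) -> list[str]:
    """Return the preferred providers that are actually available, in order."""
    chosen = [name for name in preferred if name in available]
    if not chosen:
        raise RuntimeError("no supported execution provider is available")
    return chosen


if __name__ == "__main__":
    # With onnxruntime installed, `available` would come from
    # onnxruntime.get_available_providers(), and the result would be passed
    # as providers=... to onnxruntime.InferenceSession("model.onnx", ...),
    # where "model.onnx" is a placeholder path.
    available = ["CPUExecutionProvider", "CUDAExecutionProvider"]
    print(choose_providers(available))
```

Keeping the preference list in configuration rather than code lets the same service run unchanged on a GPU server, an Intel-based edge box, or a CPU-only machine.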

3. Custom Silicon for Inference

Companies are increasingly designing domain-specific inference chips—like Google TPUs, AWS Inferentia, and Intel Habana accelerators—to achieve better cost-performance ratios.

Impact: Custom silicon lowers operational costs and energy usage, supporting massive deployment of AI-driven services such as real-time translation, image recognition, and content moderation.

4. Edge and On-Device Inference

Inference is progressively moving closer to the data source. Smartphones, surveillance systems, and industrial equipment now include AI processors for low-latency, privacy-preserving inference.

Impact: Edge inference reduces reliance on cloud connectivity, supports compliance with data privacy laws, and enables instant decision-making for applications like autonomous driving and medical diagnostics.

5. Managed Inference Platforms and MLOps

Enterprises are adopting managed model-serving platforms and MLOps pipelines to deploy, scale, and monitor inference workloads efficiently. These platforms automate model updates, canary testing, and autoscaling to ensure consistent performance.

Impact: Reduced operational complexity, faster AI deployment, and higher ROI for enterprise AI projects.
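Canary testing, mentioned above, usually means sending a small, fixed share of traffic to a new model version while the rest stays on the current one. The following is a minimal sketch of that routing decision under stated assumptions: the function name, the labels "canary" and "stable", and the use of a hashed request ID are illustrative choices, not the behavior of any specific platform.

```python
import hashlib


def route_request(request_id: str, canary_percent: int) -> str:
    """Return "canary" for roughly canary_percent% of request IDs, else "stable".

    Hashing the ID makes the decision deterministic, so retries of the same
    request always reach the same model version.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    ids = [f"req-{i}" for i in range(1000)]  # made-up request IDs
    canary_hits = sum(route_request(rid, 5) == "canary" for rid in ids)
    print(f"{canary_hits} of {len(ids)} requests routed to the canary model")
```

A rollout would typically raise `canary_percent` in steps while comparing latency and error rates between the two versions, and drop it back to zero if the canary regresses.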

6. Regulatory and Ethical Pressures

Governments worldwide are implementing AI regulations that emphasize explainability, fairness, and data privacy. This encourages localized inference—running AI models within regional data centers or on-premise systems—to comply with legal frameworks.

Impact: Increased demand for region-specific cloud infrastructure and secure, explainable inference systems.

Successful AI Inference Implementations Worldwide

- E-commerce Personalization: Global online retailers use real-time inference to generate personalized product recommendations, driving higher engagement and conversion rates.

- Conversational AI: Virtual assistants such as Alexa, Siri, and Google Assistant process millions of voice commands daily using scalable inference clusters optimized for speech recognition and intent understanding.

- On-Device Vision: Arm-based smartphones use on-device NPUs for features like facial recognition, AR filters, and image enhancement—all performed locally for privacy and speed.

- Autonomous Systems: Automotive and robotics companies deploy inference stacks at the edge to enable perception, navigation, and object detection, ensuring safety-critical decision-making in milliseconds.

- Healthcare Diagnostics: Hospitals use local inference servers to analyze medical images and pathology slides rapidly, reducing diagnostic turnaround times while complying with data protection regulations.

These implementations illustrate how AI inference drives transformation across diverse industries—combining real-time processing, cost efficiency, and scalability to unlock new possibilities.

Global and Regional Analysis

North America

North America dominates the AI inference market, led by the United States. Major cloud providers (AWS, Google Cloud, Microsoft) and semiconductor leaders (NVIDIA, AMD, Intel) operate large-scale inference infrastructure. U.S. government policies and funding programs promote AI innovation, ethical deployment, and domestic compute manufacturing. Canada, meanwhile, continues to invest in research-focused AI inference projects, particularly in healthcare and academic applications.

Europe

Europe’s AI inference market is shaped by the EU AI Act, which imposes risk-based obligations covering transparency, explainability, and human oversight, alongside existing data-privacy rules under the GDPR. These policies push enterprises toward local inference and transparent AI models. The region’s leading economies (Germany, France, and the U.K.) are investing heavily in AI R&D and cloud infrastructure, while fostering partnerships between academia and industry to develop energy-efficient inference solutions.

Asia-Pacific

Asia-Pacific represents the fastest-growing regional market. China has implemented aggressive industrial policies to achieve chip self-sufficiency and AI leadership. Government-backed programs fund the development of domestic inference accelerators and AI data centers. Japan, South Korea, and Singapore focus on smart infrastructure and robotics, promoting localized inference solutions for public safety, logistics, and healthcare. India’s “AI for All” strategy encourages public-sector AI applications and supports startups building affordable inference platforms for agriculture, education, and health services.

Middle East & Africa

Countries such as the United Arab Emirates and Saudi Arabia are positioning themselves as AI hubs through strategic investments in data centers and AI infrastructure. National AI strategies emphasize public-private partnerships to attract cloud providers and research institutions. In Africa, emerging economies are prioritizing edge AI for agriculture, telecom, and healthcare applications, leveraging cost-effective inference models to overcome connectivity challenges.

Latin America

Latin America’s AI inference market is gradually expanding, with Brazil, Mexico, and Chile leading adoption. Regional governments are establishing AI ethics frameworks and encouraging data localization. The private sector is focusing on using inference technologies for financial services, smart cities, and industrial automation.

Government Initiatives and Policies Shaping the Market

Governments worldwide are shaping the AI inference landscape through funding, regulation, and infrastructure development:

- United States: National AI strategies and executive orders promote AI R&D funding, domestic chip manufacturing, and safe AI deployment.

- European Union: The EU AI Act enforces robust governance frameworks, encouraging transparent and explainable inference models.

- China: The “Next Generation AI Development Plan” and subsidies for domestic AI chipmakers aim to establish technological independence.

- India: The “IndiaAI Mission” promotes AI innovation for social good, with a focus on edge inference for public services.

- Japan and South Korea: Initiatives support AI infrastructure expansion, energy-efficient chips, and edge computing networks.

- Middle East: National AI strategies (UAE, Saudi Arabia) incentivize global tech companies to establish inference infrastructure locally.

These coordinated policy efforts not only expand the AI inference market but also influence how and where models are deployed—balancing performance, sovereignty, and compliance.

To Get Detailed Overview, Contact Us: https://www.cervicornconsulting.com/contact-us
