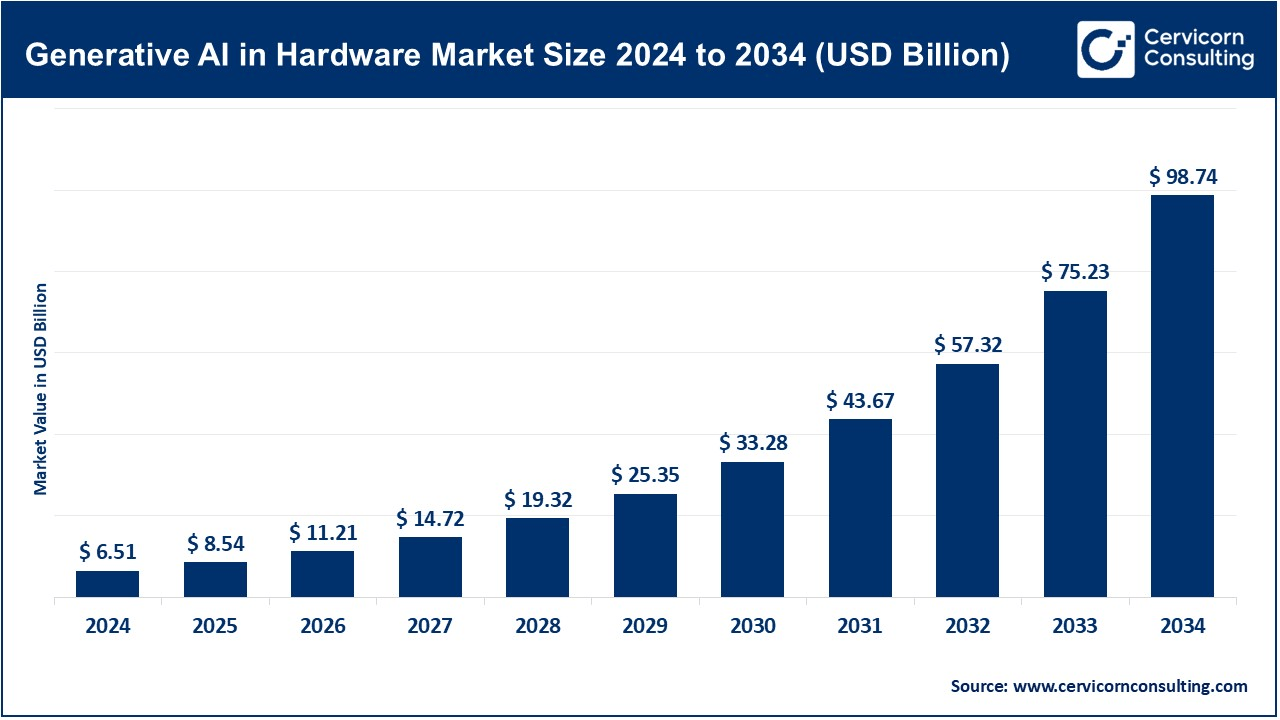

Generative AI in Hardware Market Size to Reach USD 98.74 Billion by 2034

Generative AI in Hardware Market Size

The global generative AI in hardware market size was valued at USD 6.51 billion in 2024 and is anticipated to reach around USD 98.74 billion by 2034, registering a compound annual growth rate (CAGR) of 31.24% from 2025 to 2034.
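As a rough sanity check on these figures, the growth rate implied by the two market sizes can be recomputed with the standard CAGR formula. The sketch below assumes 2024 is the base year, so 2034 is 10 compounding periods later; the function names `cagr` and `project` are illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value after compounding `rate` once per year for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures quoted in the report (USD billions).
SIZE_2024 = 6.51
SIZE_2034 = 98.74

# Assumption: 2024 is the base year, so 2034 is 10 compounding periods later.
implied_rate = cagr(SIZE_2024, SIZE_2034, periods=10)

# Forward check: compound the 2024 figure at the reported 31.24% rate.
projected_2034 = project(SIZE_2024, 0.3124, periods=10)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2034 size at 31.24%: USD {projected_2034:.2f} billion")
```

Under that base-year assumption, the implied rate lands very close to the reported 31.24%, and compounding forward at 31.24% lands close to the reported 2034 figure. The small differences come from rounding.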

What Is Generative AI in the Hardware Market?

The generative AI hardware market refers to the ecosystem of specialized hardware components developed to support AI systems capable of generating new and original content. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Neural Processing Units (NPUs), and custom AI chips. Designed specifically to meet the intensive data-processing demands of generative models like OpenAI’s GPT-4, Google’s PaLM, and Meta’s LLaMA, these components deliver the speed, precision, and efficiency necessary for both training and inference stages.

Unlike traditional computing, where CPUs dominate, generative AI workloads rely on parallel processing and vector computations—tasks better suited to GPUs and other dedicated accelerators. As the complexity and size of AI models increase, so too does the demand for hardware that can keep up with exponential computational growth. From cloud-based data centers to edge devices, generative AI hardware plays a pivotal role in unlocking the potential of intelligent systems.
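To illustrate why these workloads parallelize so well, consider the matrix-vector product at the core of a neural network layer. The sketch below uses a hypothetical 3x4 weight matrix and made-up helper names. It shows only that each output element is an independent dot product; Python threads are a stand-in for hardware lanes here and are not expected to run faster.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """One output element: a multiply-accumulate over a single weight row."""
    return sum(w * x for w, x in zip(row, vector))


def matvec_sequential(matrix, vector):
    """CPU-style loop: compute the output rows one after another."""
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix, vector, workers=4):
    """Dispatch each row's dot product as an independent task.

    No row depends on another row's result, which is the property that lets
    an accelerator spread the work across many hardware lanes at once.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))


# Hypothetical 3x4 weight matrix and 4-element input vector.
weights = [
    [1, 2, 3, 4],
    [0, 1, 0, 1],
    [2, 0, 2, 0],
]
inputs = [1, 0, 2, 1]

sequential = matvec_sequential(weights, inputs)
parallel = matvec_parallel(weights, inputs)

# Both strategies produce the same result; only the scheduling differs.
print(sequential, parallel)
```

Because the rows share no intermediate state, the same computation scales from three tasks to the thousands of parallel units found in GPUs, TPUs, and NPUs.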

Why Is Generative AI Hardware Important?

The significance of hardware in the generative AI landscape cannot be overstated. While software models make AI intelligent, hardware makes AI possible. Here’s why it matters:

- High-Performance Computing: Generative models, particularly large language models (LLMs) and diffusion-based image generators, require massive computational resources. Specialized hardware ensures faster training cycles and real-time inference.

- Energy Efficiency: AI training can consume enormous amounts of energy. AI-specific chips are designed for efficiency, reducing power usage and operational costs, especially in hyperscale data centers.

- Scalability: As organizations deploy AI across various business functions, scalable and modular hardware architectures allow infrastructure to grow in tandem with demand.

- Real-Time Processing: Edge AI devices powered by NPUs or custom silicon enable real-time decision-making in critical applications like autonomous vehicles, surveillance, and healthcare.

- Competitive Advantage: Faster, more efficient hardware shortens development timelines and improves the performance of AI-powered products and services, giving companies a strategic edge.

Generative AI in Hardware Market Growth Factors

The generative AI hardware market is experiencing exponential growth, fueled by the rapid rise of AI-powered applications across industries including healthcare, finance, manufacturing, and entertainment. The increasing complexity of generative models and their wide-ranging utility—from synthetic media to drug discovery—necessitates robust, energy-efficient hardware solutions capable of handling massive parallel computations. Ongoing advancements in chip architectures, increased capital investments in AI-focused startups, and the global race for AI leadership are driving demand for specialized hardware accelerators. Moreover, the integration of AI capabilities into consumer devices and the expansion of cloud AI infrastructure further accelerate the market’s trajectory, making AI hardware a cornerstone of digital transformation strategies worldwide.

Get a Free Sample: https://www.cervicornconsulting.com/sample/2641

Top Companies in the Generative AI Hardware Market

| Company | Specialization | Key Focus Areas | Notable Features | Market Share |
|---|---|---|---|---|
| NVIDIA | AI GPUs and Data Center Solutions | AI/ML model training and inference | CUDA ecosystem, Tensor Cores, H100 chips | ~90% in AI GPU market |
| AMD | High-performance Computing and Graphics | AI-enhanced GPUs and CPUs | RDNA/CDNA architectures, MI300 AI chip | Growing market share |
| Intel | Semiconductors, AI Chips, Edge AI | Xeon CPUs, Habana AI accelerators | AI-optimized cores, Intel Gaudi chips | Competing with AMD/NVIDIA |
| Google (TPU) | Custom AI Hardware for Cloud | TensorFlow-optimized TPUs | Edge TPU, TPU v4, ML workloads at scale | Dominant in internal use |
| Apple (Neural Engine) | On-device AI Hardware | Neural Engine in M-series chips | Face ID, on-device Siri, Health apps | Widely used in Apple devices |

Generative AI in Hardware Market Leading Trends and Their Impact

1. Custom Silicon for AI

Companies are increasingly designing custom AI chips tailored for specific workloads. Apple’s Neural Engine and Google’s TPUs are prime examples. This trend enables optimized performance, lower latency, and better integration with software platforms, setting a new standard for AI computation.

Impact: Boosts performance efficiency, reduces reliance on third-party hardware providers, and allows vertical integration of AI stacks.

2. AI at the Edge

The demand for real-time decision-making in devices like smartphones, drones, and autonomous vehicles is fueling growth in edge AI chips. Apple’s Neural Engine and Google’s Edge TPU enable localized AI processing, ensuring low latency and better privacy.

Impact: Decentralizes AI computation, reduces data center load, and enhances user experience with real-time responsiveness.

3. AI-Optimized Data Centers

Hyperscale cloud providers are investing in AI-specific infrastructure, including NVIDIA’s DGX systems and Google’s TPU clusters. These setups are designed to train massive generative models efficiently and cost-effectively.

Impact: Lowers training time, expands access to generative AI capabilities, and drives demand for specialized chips.

4. Green AI Initiatives

Given the high energy requirements of AI workloads, companies are exploring energy-efficient chip architectures. NVIDIA, Intel, and AMD are introducing power-optimized solutions, aligning with sustainability goals.

Impact: Reduces carbon footprint and improves regulatory compliance, making AI adoption more environmentally friendly.

5. Chiplet-Based Architecture

Instead of a single monolithic die, chiplet architecture divides functions across smaller chips integrated into a single package. AMD’s EPYC and MI300 leverage this approach.

Impact: Enables better yields, performance scaling, and modular upgrades without full redesigns.
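The yield benefit can be sketched with the simple Poisson yield model, in which the fraction of defect-free dies falls exponentially with die area. All numbers below are hypothetical and chosen only for illustration: the defect density, the die areas, and the assumption that chiplets are tested before assembly. Packaging yield and die-to-die interconnect overhead are ignored.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson yield model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_package(die_area_mm2, dies_per_package, defects_per_cm2):
    """Average wafer area (mm^2) consumed per working package.

    Assumes dies are tested before assembly, so a defective die is discarded
    on its own instead of scrapping the whole package.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_package * die_area_mm2 / good_fraction


# Hypothetical numbers chosen for illustration only.
DEFECT_DENSITY = 0.1      # defects per cm^2
MONOLITHIC_AREA = 800.0   # mm^2, one large die
CHIPLET_AREA = 200.0      # mm^2, four of these replace the large die

mono_yield = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet_yield = poisson_yield(CHIPLET_AREA, DEFECT_DENSITY)
mono_cost = silicon_per_good_package(MONOLITHIC_AREA, 1, DEFECT_DENSITY)
chiplet_cost = silicon_per_good_package(CHIPLET_AREA, 4, DEFECT_DENSITY)

print(f"Monolithic die yield: {mono_yield:.1%}")
print(f"Single chiplet yield: {chiplet_yield:.1%}")
print(f"Silicon per good package: {mono_cost:.0f} mm^2 vs {chiplet_cost:.0f} mm^2")
```

With these assumed inputs, the smaller dies yield noticeably better and less wafer area is spent per working package. Real designs give some of that gain back to packaging and interconnect costs.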

Successful Examples of Generative AI in the Hardware Market

1. NVIDIA’s H100 Tensor Core GPU

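The H100 GPU, built on NVIDIA’s Hopper architecture and designed for generative AI and deep learning, targets large model training with features such as the Transformer Engine and FP8 precision. It is used to train and serve GPT-class language models, Stable Diffusion, and numerous enterprise applications.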

2. Apple’s Neural Engine

Embedded in Apple’s A-series and M-series chips, including the M1 and M2, the Neural Engine accelerates on-device processing for AI tasks, enabling real-time features like facial recognition and enhanced camera processing without relying on cloud connectivity.

3. Google’s TPU v4 Pods

These custom-built accelerators are optimized for Google’s AI workloads, supporting large-scale generative models like PaLM and services such as Bard. Google has reported that TPU v4 delivers about 2.7x the performance per watt of its predecessor, TPU v3.

4. Intel Gaudi2 AI Processors

Developed by Habana Labs, an Intel company, Gaudi2 is a dedicated deep learning accelerator that offers scalable generative model training with a focus on efficiency, positioning it as a competitor to NVIDIA in AI data centers.

5. AMD Instinct MI300 Series

Targeted at data center AI workloads, the MI300 series includes the MI300A, which combines CPU and GPU cores in a single package, and the GPU-only MI300X. Both are aimed at high-performance generative model training and inference, particularly in cloud and enterprise environments.

Global Regional Analysis Including Government Initiatives and Policies

United States

The U.S. remains a global leader in AI hardware innovation, supported by major players like NVIDIA, Intel, and AMD. The CHIPS and Science Act, which allocates over $50 billion to semiconductor R&D and manufacturing, aims to strengthen domestic chip production and reduce reliance on foreign suppliers. Additionally, the National AI Initiative encourages public-private partnerships to advance AI hardware technologies.

European Union

The EU emphasizes ethical and sustainable AI development. Through programs like Horizon Europe and Digital Europe, billions are being invested into AI and HPC (High-Performance Computing) infrastructure. The European Processor Initiative (EPI) also aims to develop indigenous AI-capable processors to reduce dependency on U.S. and Asian technologies.

China

China is rapidly scaling up its AI capabilities with significant government backing. Policies like the “New Generation Artificial Intelligence Development Plan” include heavy investments in AI chip startups, data centers, and generative AI platforms. Firms like Huawei and Alibaba are actively developing AI accelerators to compete globally.

India

India’s National Strategy for Artificial Intelligence, published by NITI Aayog, focuses on AI for social good, but hardware development is still emerging. Initiatives like Semicon India aim to bolster semiconductor design and manufacturing to support AI development domestically.

Japan and South Korea

Both nations are leveraging their semiconductor expertise to enter the generative AI hardware space. Japan’s AI Bridging Cloud Infrastructure (ABCI) and South Korea’s support for Samsung and SK Hynix in AI chip innovation highlight their ambitions to become AI hardware leaders.

Middle East

Countries like the UAE and Saudi Arabia are investing heavily in AI and cloud infrastructure as part of national programs such as Saudi Arabia’s Vision 2030 and the UAE’s National Strategy for Artificial Intelligence 2031. Investments include establishing AI-focused data centers and supporting the development of custom chip solutions for regional applications.

To get a detailed overview, contact us: https://www.cervicornconsulting.com/contact-us
