AI Infrastructure Market Size

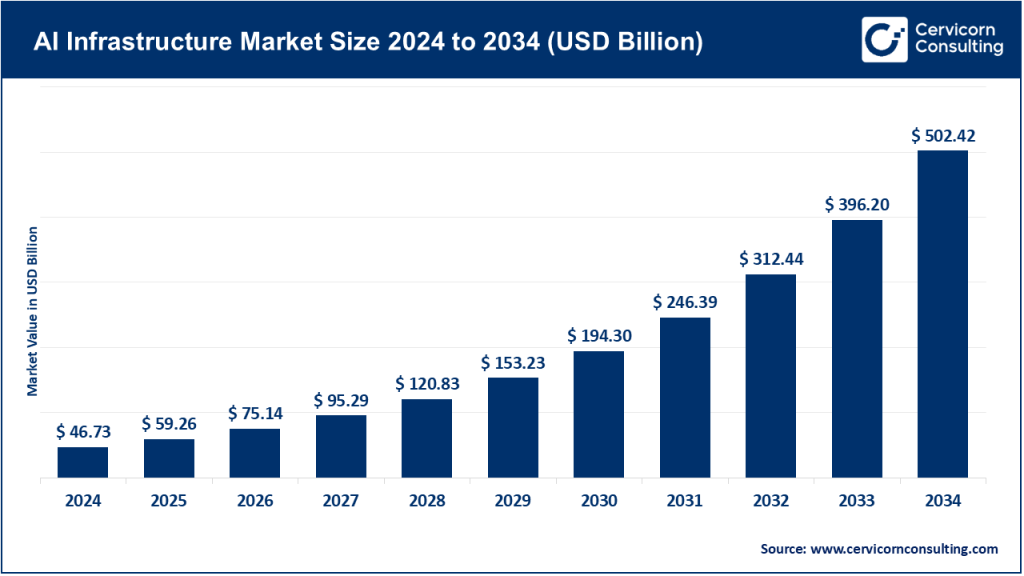

The global AI infrastructure market size was worth USD 46.73 billion in 2024 and is anticipated to expand to around USD 502.42 billion by 2034, registering a compound annual growth rate (CAGR) of 26.81% from 2025 to 2034.
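As a quick sanity check on the headline figures, the sketch below recomputes the growth rate implied by the 2024 and 2034 values. It assumes 2024 is the base year and that growth compounds annually over ten periods (2025 through 2034). The function and variable names are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding `start_value` at `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures quoted in the text, in USD billions.
base_2024 = 46.73
forecast_2034 = 502.42
stated_cagr = 0.2681

# Assumption: 10 compounding periods, with 2024 as the base year.
periods = 10

implied_cagr = cagr(base_2024, forecast_2034, periods)
projected_2034 = project(base_2024, stated_cagr, periods)

print(f"Implied CAGR: {implied_cagr:.2%}")
print(f"2034 value at the stated CAGR: USD {projected_2034:.1f} billion")
```

Under these assumptions the implied rate and the stated 26.81% agree to within rounding, so the three headline numbers are mutually consistent.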

What is the AI Infrastructure Market?

AI infrastructure refers to the ecosystem of hardware, software, and services required to build, train, fine-tune, and deploy AI models efficiently and securely. It forms the technological foundation for modern AI development.

Key components include:

- Compute: Specialized processors such as GPUs, TPUs, and AI accelerators designed for large matrix computations and neural network training.

- Storage and Data Pipelines: High-speed data systems that support massive parallel processing and manage the large volumes of structured and unstructured data used in AI workflows.

- Networking: Low-latency, high-bandwidth networks such as InfiniBand and NVLink that connect compute nodes to handle distributed training.

- Cloud and On-Premises Platforms: Hybrid environments offering scalable resources for both training and inference.

- Software and Orchestration: Frameworks like TensorFlow, PyTorch, and MLOps platforms that automate data preparation, model management, and deployment.

- Security and Compliance: Tools and protocols for privacy, encryption, model safety, and adherence to regional regulations.

Published estimates differ depending on how the market is scoped, ranging from tens to hundreds of billions of dollars for 2024, and most project double-digit annual growth over the next decade. This growth reflects not only cloud spending but also investments in data centers, hardware, and AI-specific design tools.

AI Infrastructure Market — Growth Factors

The AI infrastructure market is expanding rapidly due to the surge in compute-intensive workloads driven by foundation models and generative AI. Hyperscale cloud providers are investing heavily in GPUs, accelerators, and custom silicon to meet this demand. Enterprises across industries are integrating AI into workflows, fueling demand for scalable cloud and hybrid infrastructure. A falling cost per unit of compute, advances in power efficiency, and greater availability of AI-optimized hardware are making large-scale training and inference more affordable. Governments are also investing in national AI compute capabilities, driving public-private partnerships and new supercomputing projects.

Additionally, AI-as-a-Service offerings and turnkey infrastructure solutions are lowering the barrier to entry for smaller firms, while robust supply chains for memory, networking, and packaging are enabling broader deployment. Together, these forces are accelerating both near-term adoption and long-term capital investment in AI infrastructure worldwide.

Why AI Infrastructure Is Important

AI models rely on vast amounts of computation and data. Without the right infrastructure, these models are slow, expensive, and difficult to deploy at scale. AI infrastructure ensures that organizations can train large models efficiently, serve predictions in real time, and maintain performance consistency.

For businesses, AI infrastructure directly influences innovation and competitiveness. A well-designed infrastructure lowers costs, improves energy efficiency, and speeds up development cycles. It also supports data governance and compliance — essential for meeting the growing demands of ethical and regulated AI deployment. As enterprises build AI capabilities into their products and services, infrastructure becomes both a productivity enabler and a strategic differentiator.

AI Infrastructure Market — Top Companies

Below is an overview of the key companies driving the AI infrastructure landscape, detailing their core strengths, specializations, and market standing in 2024.

| Company | Specialization | Key Focus Areas | Notable Features | 2024 Revenue (Approx., USD) | Market Share / Position | Global Presence |
|---|---|---|---|---|---|---|
| Amazon Web Services (AWS) | Cloud infrastructure (IaaS/PaaS) | Elastic GPU instances, SageMaker, Bedrock, edge computing | Global data centers, mature MLOps tools, large partner network | $107.6 B | Leading cloud provider (~30% of global market) | Worldwide – largest hyperscale presence across North America, Europe, and APAC |
| Google (Google Cloud / GCP) | Cloud and AI platform | Vertex AI, TPUs, data analytics, generative AI services | Deep AI integration, custom TPU chips, secure infrastructure | $43.2 B | ~10–12% of global cloud market, fastest growth in AI workloads | Global – expanding specialized AI regions and data centers |
| NVIDIA | GPUs and AI accelerators | Data-center GPUs, CUDA software stack, DGX systems | Dominant AI hardware provider, integrated hardware-software ecosystem | $60.9 B | ~80–90% of data-center GPU market | Global – major supplier to hyperscalers, OEMs, and research institutes |
| Cadence Design Systems | Electronic design automation (EDA) and AI-assisted chip design | AI-driven design workflows, IP libraries, verification tools | Enables custom AI chip design and optimization | $4.6 B | Leading EDA vendor, indirect but critical in AI hardware design | Global – strong presence in the U.S., Asia, and Europe |
| Advanced Micro Devices (AMD) | CPUs and GPUs for data centers | EPYC processors, Instinct accelerators, custom silicon | Competitive alternative to NVIDIA, strong CPU-GPU portfolio | $25.8 B | Smaller but fast-growing share of data-center GPU market | Global – partnerships with major OEMs and cloud platforms |

These companies form the backbone of the AI infrastructure ecosystem. While NVIDIA dominates hardware acceleration, AWS and Google lead cloud-based AI infrastructure, AMD provides competitive diversification, and Cadence plays a foundational role in chip design automation.

Leading Trends and Their Impact

1. Foundation Models and Specialized Accelerators

The demand for training massive foundation models has made GPU and accelerator supply a key bottleneck. Vendors are racing to produce chips with higher memory bandwidth, better interconnects, and improved efficiency. NVIDIA’s Blackwell and AMD’s Instinct series illustrate how performance innovations are shaping AI economics, while hyperscalers like Google are deploying their own custom silicon to control costs and performance.

2. Cloud and Hybrid Consumption Models

Most enterprises are adopting hybrid strategies — using cloud for flexible, large-scale training and keeping inference or sensitive workloads on-premises for security and latency reasons. Managed AI services like SageMaker and Vertex AI are simplifying AI adoption, allowing companies to deploy infrastructure without deep in-house expertise.

3. AI Factories and Superclusters

Governments and corporations are building “AI factories” — massive supercomputing facilities optimized for AI workloads. These projects, often worth billions of dollars, combine specialized hardware, energy-efficient cooling, and software orchestration to train frontier models and national AI systems. They represent a shift toward localized, sovereign compute capacity.

4. MLOps and Software Standardization

As AI deployment scales, managing the lifecycle of models — from training to monitoring — becomes essential. MLOps tools are evolving to offer reproducibility, cost control, and compliance management. Vendors that integrate hardware with comprehensive software stacks are gaining market share by simplifying complex workflows.

5. Energy Efficiency and Sustainability

Training large models requires enormous power and cooling resources. As data centers expand, sustainability has become a central design criterion. Companies are investing in liquid cooling, renewable energy sourcing, and energy-efficient architectures to reduce operational costs and meet environmental goals.

6. Edge AI and Low-Power Accelerators

With AI increasingly embedded in devices and industrial systems, demand for low-power inference chips is surging. This trend complements, rather than replaces, the growth in large-scale data-center AI. It also drives innovation in semiconductor design for automotive, robotics, and IoT applications.

7. Supply Chain and Geopolitical Realignment

Export controls, semiconductor supply constraints, and regional policies are reshaping the global AI infrastructure supply chain. Countries are investing in domestic chip fabrication and local data centers to reduce dependency on foreign technology. This regional diversification is both a challenge and an opportunity for vendors.

Successful Examples of AI Infrastructure Deployments

NVIDIA DGX SuperPODs in Research and Industry

NVIDIA’s DGX SuperPODs have been deployed across pharmaceutical, automotive, and academic sectors to accelerate AI research. These integrated systems deliver petaflop-scale performance out of the box, enabling organizations to train complex models in weeks rather than months.

AWS and Google Cloud AI Platforms

AWS has expanded its GPU instance types and launched Bedrock and SageMaker JumpStart to streamline generative AI deployment. Google Cloud’s Vertex AI platform offers a fully managed environment with native support for custom and foundation models. These hyperscale platforms have democratized access to advanced AI infrastructure, allowing even small enterprises to build and deploy AI applications globally.

European AI Supercomputing Initiatives

The European Union is investing in large-scale AI superclusters and “AI gigafactories” designed to support regional innovation and reduce reliance on non-EU compute resources. These projects aim to bolster research, foster industrial AI, and ensure data sovereignty under the EU’s regulatory framework.

Cadence in AI-Driven Chip Design

Cadence Design Systems has leveraged AI-powered EDA tools to assist semiconductor firms in designing next-generation accelerators. This integration of AI into chip design itself closes the feedback loop — AI helping to create better hardware for AI workloads.

Global and Regional Analysis — Government Initiatives and Policies

North America (United States)

The U.S. leads in AI infrastructure investment, driven by both private enterprise and federal initiatives. National AI strategies emphasize securing semiconductor supply chains, developing frontier AI research clusters, and incentivizing data-center expansion. Regulatory efforts are focusing on responsible AI development while ensuring that innovation remains competitive. This combination of policy support and market dynamism keeps the U.S. at the forefront of global AI infrastructure.

Europe (European Union)

The European Union’s AI strategy combines regulation with heavy investment. The AI Act establishes a risk-based framework for ethical and transparent AI systems, influencing how infrastructure is designed and governed. Simultaneously, the EU is funding major AI supercomputing projects under its Digital Europe Programme. These dual approaches — governance and capacity building — aim to position Europe as a leader in safe and sovereign AI.

Asia

- China: The Chinese government is making large state-backed investments in AI data centers, accelerator production, and national supercomputing hubs. Emphasis is placed on developing domestic GPU alternatives and maintaining control over critical AI infrastructure.

- India: India is crafting a national AI strategy focused on democratizing access, developing local talent, and establishing standards for AI governance. Public-private partnerships are forming to create shared compute facilities for startups, research, and government applications.

- Japan and South Korea: Both nations are prioritizing industrial AI and robotics, funding AI research infrastructure, and encouraging collaborations between chip manufacturers and software firms.

Together, Asia’s initiatives reveal diverse strategies — China’s scale and self-reliance, India’s open innovation and governance approach, and Japan/Korea’s industrial specialization.

Middle East, Latin America, and Africa

These regions are emerging players in the AI infrastructure ecosystem. The Middle East, particularly the UAE and Saudi Arabia, is investing in national AI centers and hyperscale data centers as part of their digital transformation plans. Latin American nations are partnering with global cloud providers to enhance AI research access, while African countries are focusing on local data sovereignty and AI-for-development initiatives. Though smaller in scale, these efforts reflect a growing global awareness of AI infrastructure’s strategic importance.

Key Takeaways for Organizations

- Align Infrastructure with Workload Needs: Distinguish between training, fine-tuning, and inference workloads to optimize hardware and cost efficiency.

- Adopt Hybrid and Multi-Cloud Strategies: Use cloud for elastic scalability while maintaining sensitive workloads on-premises or at the edge.

- Prioritize Governance and Compliance: Build observability and traceability into AI systems to meet regulatory and ethical standards.

- Monitor Chip and Hardware Roadmaps: Upcoming advances in GPUs, AI accelerators, and interconnect technologies will directly influence performance and cost structures.

- Invest in Integrated Stacks: Combining compute, storage, and orchestration platforms shortens deployment timelines and simplifies maintenance.

